FDQM scripting uses vbScript so scripting features like file access are available as well as ADODB to make database connections and queries. FDQM also provides its own API libraries to access native features such as the current POV. These are available via the objects API (application programming interface), DW (Data Window for database access) and RES (FDQM resources). These can all be seen in the Object Browser in FDM Workbench.

In our source systems the data is stored with fields that identify the period and year. The RES.PstrPer object returns the period currently selected in FDQM. This is the value in the Text Description field in the Control Table for Periods

To access the period and year we take appropriate substrings of the RES.PstrPer value.

Dim StrPer 'Uses the Date POV to find the current period

Dim StrYr 'Uses the Date POV to find the current year

strPer = Left(RES.PstrPer,3) 'Retrieve the period from the POV

strYr = Right(RES.PstrPer,4) 'Retrieve the year from the POV

Dim StrYr 'Uses the Date POV to find the current year

strPer = Left(RES.PstrPer,3) 'Retrieve the period from the POV

strYr = Right(RES.PstrPer,4) 'Retrieve the year from the POV

If the periods are referenced by number instead of name there are a variety of ways to accomplish the translation. I like finding the position of the period in the full list of periods then doing some math.

' The strAllPers contains the abbreviations of all the periods

' Search for the position of the current period, subtract 1, divide by 4 and add 1

' So for Jan we get ((1-1=0)/4=0)+1 = 1

' for Feb we get ((5-1=4)/4=1)+1 = 2

' etc.

' for Dec we get ((45-1=44)/4=11)+1 = 12

'

Dim strPerNum ' Numeric value of period

Dim strAllPers ' All periods for use in index

strAllPers = "Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec"

strPerNum = ((InStr(strAllPers,strPer)-1)/4)+1

' Search for the position of the current period, subtract 1, divide by 4 and add 1

' So for Jan we get ((1-1=0)/4=0)+1 = 1

' for Feb we get ((5-1=4)/4=1)+1 = 2

' etc.

' for Dec we get ((45-1=44)/4=11)+1 = 12

'

Dim strPerNum ' Numeric value of period

Dim strAllPers ' All periods for use in index

strAllPers = "Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec"

strPerNum = ((InStr(strAllPers,strPer)-1)/4)+1

Problem

For one of our ERP systems we have to provide the dates for the start and end of the period to a SQL stored procedure. The RES.PdtePerKey retrieves the end date for the period which is the Period field in the Periods Control Table as shown in the previous screen shot. Then use the vbScript DateAdd function to calculate the start date of the period.

Dim dtePerKey ' Last date of the current POV period

dtePerKey = RES.PdtePerKey

Dim dteStart ' Start date of the current POV period

dteStart = DateAdd("d",1,DateAdd("m", -1, dtePerKey))

dtePerKey = RES.PdtePerKey

Dim dteStart ' Start date of the current POV period

dteStart = DateAdd("d",1,DateAdd("m", -1, dtePerKey))

For Sep - 2016 we get 9/30/16 as dtePerKey. We subtract one month to get the last day of August, then add one day to get the first day of September. Note that the RES.PdtePerKey returns a date value, not a string, so use appropriate conversion functions as needed.

Our problem came when we converted from calendar year periods to 4-4-5 fiscal periods. This means we can't guarantee that the period end date is the last day of the month or the period start date is the first of the month. What's a mother to do?

First we configure the correct period end dates for our fiscal periods in the Periods control table. Define the period end date and the prior period end date along with the target period and year.

In the Workbench Client there are a number of Accelerators. Under the section Point-Of-View Information there is an accelerator named Get Period Prior Date Key.

Sweet. In the FDQM Periods control table we define the correct end dates for the periods, use the accelerator to get the prior period end date, then add one day to get our period start date. Double-clicking the accelerator adds the following code to your script.

'Declare Local Variables

Dim dtePriorPeriodKey

'Get prior period date key

dtePriorPeriodKey = API.POVMgr.fPeriodKey(API.POVMgr.PPOVPeriod, True).dteDateKey

Dim dtePriorPeriodKey

'Get prior period date key

dtePriorPeriodKey = API.POVMgr.fPeriodKey(API.POVMgr.PPOVPeriod, True).dteDateKey

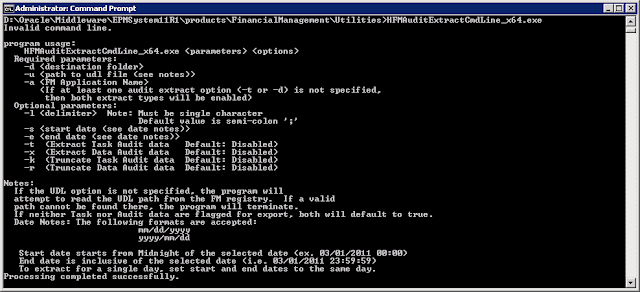

Easy-peasy. But when you run the script you get:

|

| Error: An error occurred importing the file. Detail: Object required: 'API' |

Ain't that a kick in the knickers? It turns out you can only use the calls to API objects in data pump scripts, not integration scripts.

Fortunately we have standard vbScript features available including ADODB which allows access to databases. Any databases. Even our FDQM database. FDQM uses the tPOVPeriod table to store the period data. We can use ADODB to make a connection to the FDQM database, query the tPOVPeriod table for the period in our POV, get the PriorPeriodKey value, then add one day.

The code I use which includes some error handling looks like this:

' Find the start date of the period. We can't use the API in the import scripts

' The tPOVPeriod table in the FDQM database has the period information which includes

' the prior period end date. So we make another ADODB connection to that database

' and find the record for this period.

' Get the PriorPeriodKey field from the result set and add one day

'

Dim fdmSS ' Connection to FDQM database

Dim fdmRs ' Records returned from query

Set fdmSS = CreateObject("ADODB.Connection")

Set fdmRs = CreateObject("ADODB.Recordset")

fdmSS.Open "Provider=SQLOLEDB.1;Integrated Security=SSPI;Persist Security Info=False;Initial Catalog=FDQM;Data Source=MySQLServer;"

' The query should return one record

'

Dim fdmQ

fdmQ = "SELECT * FROM tPOVPeriod where PeriodDesc = '" & RES.PstrPer & "'"

fdmRS.Open fdmQ, fdmSS

' If we get no records then something is broken

'

If fdmRS.bof And fdmRS.eof Then

RES.PstrActionValue = "No Start date found"

Import_Script=False

Exit Function

Else

Dim dtePriPer ' Prior period end date as datetime value

dtePriPer = fdmRS.Fields("PriorPeriodKey").Value

dteStart = DateAdd("d",1,dtePriPer) ' Add 1 day to get first day of current period

End If

' The tPOVPeriod table in the FDQM database has the period information which includes

' the prior period end date. So we make another ADODB connection to that database

' and find the record for this period.

' Get the PriorPeriodKey field from the result set and add one day

'

Dim fdmSS ' Connection to FDQM database

Dim fdmRs ' Records returned from query

Set fdmSS = CreateObject("ADODB.Connection")

Set fdmRs = CreateObject("ADODB.Recordset")

fdmSS.Open "Provider=SQLOLEDB.1;Integrated Security=SSPI;Persist Security Info=False;Initial Catalog=FDQM;Data Source=MySQLServer;"

' The query should return one record

'

Dim fdmQ

fdmQ = "SELECT * FROM tPOVPeriod where PeriodDesc = '" & RES.PstrPer & "'"

fdmRS.Open fdmQ, fdmSS

' If we get no records then something is broken

'

If fdmRS.bof And fdmRS.eof Then

RES.PstrActionValue = "No Start date found"

Import_Script=False

Exit Function

Else

Dim dtePriPer ' Prior period end date as datetime value

dtePriPer = fdmRS.Fields("PriorPeriodKey").Value

dteStart = DateAdd("d",1,dtePriPer) ' Add 1 day to get first day of current period

End If

While I used this technique to get to the prior period date we can get any fields we want from any FDQM tables.

- tPOVPeriod has the period control table data

- tPOVCategory has the Categories control table data

- tCtrlCurrency has the Currency control table data

- tPOVPartition has the partition/location data

- tDataMap has the mapping tables for all partitions and dimensions

- tLogActivity has the process and error logging data

- tSecUser lists all provisioned users and their security level

- tSecUserPartition lists all users, their provisioned partitions, and default partition

I can't think of a good reason why you would want to get to some of this data in an import script, but it is available if you need it.