```python
# fdmContext is a dictionary, so keys are read with square brackets, not parentheses
perName = fdmContext["PERIODNAME"]
```

The ability to query the context of the current rule provides a lot of power and flexibility in your Jython scripts. But the keys for the fdmContext dictionary are not well documented. If you search the web with Bing or Google, you only find a handful of the possible values scattered across various blog pages.

Francisco Amores has an excellent blog that focuses on FDMEE. One of his posts shows sample code to extract all of the keys and values from the dictionary. I used this in the BefImport script to dump the values to the debug log at the start of processing. Be sure to set the log level to 5 so the debug information gets logged. You can also use the Jython file I/O functions to open, write, and close a file to export the list to any convenient location.
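Here is a minimal sketch of both options. It is not Francisco's code, and the helper names `context_lines`, `dump_context`, and `export_context` are hypothetical. It assumes it runs inside an FDMEE event script such as BefImport, where FDMEE supplies `fdmContext` and `fdmAPI`, and that `fdmAPI.logDebug` is the debug-level logging call. The file export uses plain open/write/close inside try/finally rather than a `with` statement, on the assumption that your FDMEE release may still ship the older Jython 2.5 interpreter.

```python
# Hypothetical helpers that list every fdmContext key and its value.
# Inside an FDMEE event script, fdmContext and fdmAPI are provided by FDMEE.

def context_lines(context):
    """Return 'KEY = value' strings for every key, sorted alphabetically."""
    lines = []
    for key in sorted(context.keys()):
        lines.append("%s = %s" % (key, context[key]))
    return lines

def dump_context(context, log):
    """Send each line to a logging callable such as fdmAPI.logDebug."""
    lines = context_lines(context)
    for line in lines:
        log(line)
    return lines

def export_context(context, path):
    """Write the key/value list to a text file with open, write, and close."""
    f = open(path, "w")
    try:
        for line in context_lines(context):
            f.write(line + "\n")
    finally:
        f.close()

# Usage inside BefImport.py (debug lines only appear at log level 5):
# dump_context(fdmContext, fdmAPI.logDebug)
# export_context(fdmContext, fdmContext["OUTBOXDIR"] + "/fdmContext.txt")
```

Passing the logger in as a parameter keeps the helper independent of FDMEE, so the same function can write to the debug log or collect the lines in a list.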

The goal of this post is to list all the possible context keys and describe their use and origin. For brevity's sake, I will refer to the Workspace Navigate > Administer > Data Management tab as FDMEE in the following descriptions.

Directory Keys

| Key | Description |
|---|---|
| APPROOTDIR | The root directory for the FDMEE application. The inbox, outbox, data, and scripts directories are below this root. This is defined in the System Settings section of FDMEE. |
| BATCHSCRIPTDIR | The directory on the FDMEE server that is created at install and holds utility scripts including encryptpassword, importmapping, loaddata, loadmetadata, regeneratescenario, runbatch, and runreport. |
| EPMORACLEHOME | The EPMSystem11R1 directory. |
| EPMORACLEINSTANCEHOME | The EPM instance inside the user_projects directory. This location and EPMORACLEHOME are defined during the install and configuration of FDMEE. |
| INBOXDIR | The default location for importing data files. |
| OUTBOXDIR | The default location for the .dat, .err, and .drl files created during data loads. It also contains the logs directory, which holds the status logs that can be handy for troubleshooting errors. |
| SCRIPTSDIR | Where the scripts are saved. INBOXDIR, OUTBOXDIR, and SCRIPTSDIR are created when you click the Create Application Folders button under System Settings in FDMEE. |
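The directory keys are most useful for building paths in a script. A small sketch, assuming only the INBOXDIR and OUTBOXDIR keys and the logs folder under the outbox described above; the helper names are hypothetical. `os.path.join` is used so the separator matches the operating system of the FDMEE server.

```python
import os

def inbox_file(context, file_name):
    """Full path to a file sitting in the application's inbox directory."""
    return os.path.join(context["INBOXDIR"], file_name)

def outbox_logs_dir(context):
    """Path to the logs folder under the outbox, where the status logs live."""
    return os.path.join(context["OUTBOXDIR"], "logs")

# Usage in an event script:
# logDir = outbox_logs_dir(fdmContext)
```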

Source Keys

These keys reference the AIF_SOURCE_SYSTEMS table in the FDMEE database and most of the values are defined in Data Management > Setup > Source System.

| Key | Description |
|---|---|
| SOURCEID | The SOURCE_SYSTEM_ID field, which is a sequential index value. |
| SOURCENAME | The name of the source system. |
| SOURCETYPE | The type of the source system, such as File, SAP, MySQL, etc. |
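SOURCETYPE lets one script behave differently depending on where the data comes from. A hedged sketch: the helper name is hypothetical, and the exact text stored for a file-based source ("File", taken from the examples above) is an assumption, so the comparison ignores case and surrounding spaces. Check the value your own log dump shows before relying on it.

```python
def is_file_source(context):
    """True when the current rule's source system type looks like a flat file."""
    source_type = context.get("SOURCETYPE")
    if source_type is None:
        return False
    # Assumption: file-based source systems report a SOURCETYPE of "File".
    return str(source_type).strip().lower() == "file"

# Usage in an event script:
# if is_file_source(fdmContext):
#     fdmAPI.logDebug("Processing a file-based source")
```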

Target Keys

These keys reference the AIF_TARGET_APPLICATIONS table in the FDMEE database with most of the values defined in Data Management > Setup > Target Application.

| Key | Description |
|---|---|
| APPID | The ID of the target application. This is the sequential integer APPLICATION_ID key in the AIF_TARGET_APPLICATIONS table. |
| TARGETAPPDB | The database for the target application if loading to Essbase. |
| TARGETAPPNAME | The name of the target application. |
| TARGETAPPTYPE | The type of application, such as Planning, Essbase, Financial Management, etc. |

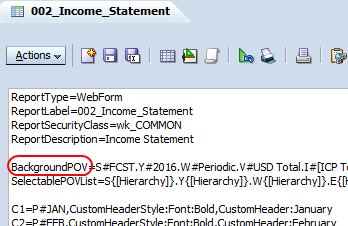
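Because TARGETAPPDB only applies when loading to Essbase, code that reports on the target should not assume it is populated. A minimal sketch, assuming the key is None or absent for other application types; the helper name `target_label` is hypothetical.

```python
def target_label(context):
    """Return 'App.Db' when a target database is set, otherwise just the app name."""
    app = context["TARGETAPPNAME"]
    db = context.get("TARGETAPPDB")  # assumed empty for non-Essbase targets
    if db:
        return "%s.%s" % (app, db)
    return app
```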

POV Keys

These are values defined in the Point of View on the Workflow tab.

| Key | Description |
|---|---|
| CATKEY | The CATKEY field in the TPOVCATEGORY table, a sequential primary key for the table. |
| CATNAME | The CATNAME field, which names the category as defined in the Category Mapping section of FDMEE. |
| LOCKEY | The PARTITIONKEY field in the TPOVPARTITION table. This is a sequential integer that is the primary key for the table. |
| LOCNAME | The name as defined in the Location section of FDMEE. It is held in the PARTNAME field in the TPOVPARTITION table. |
| PERIODKEY | The full date of the period in the POV. This is the PERIODKEY field, which is the primary key in the TPOVPERIOD table. |
| PERIODNAME | The period name as defined in the Period Mapping section of FDMEE. This is the PERIODDESC field in the TPOVPERIOD table. |
| RULEID | The RULE_ID field in the AIF_BALANCE_RULES table. It is a sequential integer that is the primary key for the table. |
| RULENAME | The name of the rule as defined in the Workflow > Data Load Rule section of FDMEE. It is held in the RULE_NAME field in the AIF_BALANCE_RULES table. |
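The POV keys tell a script exactly which location, category, and period it is processing, which makes them handy for naming output files. A sketch that builds a file name from LOCNAME, CATNAME, and PERIODNAME; the naming pattern and the helper name are hypothetical, and any character other than a letter, digit, hyphen, or underscore is replaced in case a name contains something awkward for a file system.

```python
import re

def pov_file_name(context, extension="txt"):
    """Build a name like Texas_Actual_Jan-15.txt from the current POV."""
    parts = [context["LOCNAME"], context["CATNAME"], context["PERIODNAME"]]
    # Replace anything other than letters, digits, hyphen, or underscore with "_".
    safe = [re.sub(r"[^A-Za-z0-9_-]", "_", str(p)) for p in parts]
    return "_".join(safe) + "." + extension
```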

Data Load Keys

These values are generated when the load rule runs. The values come from the AIF_BAL_RULE_LOADS table.

| Key | Description |
|---|---|
| LOADID | The LOADID field, which is a sequential primary key in the AIF_BAL_RULE_LOADS table. For loads that don't come from text files, this is the p_period_id variable in ODI. |
| EXPORTFLAG | The value is Y or N depending on whether or not we are exporting to the target. The value is in the EXPORT_TO_TARGET_FLAG field. |
| EXPORTMODE | The type of export, which can be Replace, Accumulate, Merge, or Replace By Security. The value is in the EXPORT_MODE field. |
| FILEDIR | When loading from a file, this is the directory beneath APPROOTDIR where the file is found. When not doing a file load, this value is None and there is no FILENAME key. The value is in the FILE_PATH field. |
| FILENAME | The name of the data file in FILEDIR, held in the FILE_NAME_STATIC field. The full path to the data load file is APPROOTDIR/FILEDIR/FILENAME. |
| IMPORTFLAG | The value is Y or N depending on whether or not we are importing from the source. This might be N if we ran an import in the Data Load Workbench, corrected some validation errors, and then ran the export as a follow-up step. The value is in the IMPORT_FROM_SOURCE_FLAG field. |
| IMPORTFORMAT | The name of the Import Format configured on the Setup tab of FDMEE. The value is in the IMPGROUPKEY field. |
| IMPORTMODE | I have no idea what this field is or what it does. In all my testing it had the value None. Maybe it is a placeholder for something planned in the future? |
| MULTIPERIODLOAD | This is Y if you are loading more than one period, which you would do from the dialog displayed when you click the Execute button on the Data Load Rule tab. There isn't a field for this in the AIF_BAL_RULE_LOADS table, so I suspect it is set to Y if START_PERIODKEY is not equal to END_PERIODKEY. |
| USERLOCALE | The locale of the current user, used to translate prompts into other languages. I can't find this in any of the tables, so I suspect it is pulled directly from Workspace. |
| USERNAME | The username of the person running the data load. This isn't held in any of the AIF_BAL_RULE_xxx tables, so it may be pulled from Workspace. |
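Per the FILEDIR and FILENAME descriptions, the full data file path is APPROOTDIR/FILEDIR/FILENAME, FILEDIR is None for non-file loads, and FILENAME is missing entirely. A sketch that combines those rules, using `.get` so a non-file load returns None instead of raising KeyError; the helper names are hypothetical. Stripping a leading slash from FILEDIR is a defensive assumption, since `os.path.join` would otherwise discard APPROOTDIR.

```python
import os

def data_file_path(context):
    """Full path of the data file for a file load, or None for other loads."""
    file_dir = context.get("FILEDIR")
    file_name = context.get("FILENAME")  # this key is absent for non-file loads
    if file_dir is None or file_name is None:
        return None
    # Defensive: a leading separator would make os.path.join drop APPROOTDIR.
    file_dir = file_dir.lstrip("/\\")
    return os.path.join(context["APPROOTDIR"], file_dir, file_name)

def is_flag_set(context, key):
    """True when a Y/N key such as IMPORTFLAG or EXPORTFLAG is 'Y'."""
    return context.get(key) == "Y"

# Usage in BefImport.py:
# if is_flag_set(fdmContext, "IMPORTFLAG"):
#     path = data_file_path(fdmContext)
```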

This is most of the information, but I am missing a few things, such as the source of the USERNAME key. If you have information to add, please reply in the comments below to assist your fellow travelers.