We used to get reports from users in the field that somebody moved their cheese. Fingers were pointed, accusations made, email storms erupted, tsunamis demolished fragile shore lines, meteors collided in the stratosphere, all cascading into a cacophony of dissonance that gradually decrecendoed until the next crisis wherein the entire process repeated. The decision was made to enable data auditing in HFM to prevent such catastrophes.

The good thing about data auditing in HFM is that it records every transaction including data entry, data loads, data clears and data copies. The bad thing about data auditing in HFM is that it records every transaction, so unless you are surgical about what gets audited you can generate an enormous log.

Data auditing is configured at the intersection Scenario and Account. For Scenarios the field Enable Data Audit has to be set to Y (yes) or O (override) instead of the default of N. Y indicates that everything in that scenario gets audited, O indicates the auditing is optional and will defer to the configuration of the Account dimension. Unless you have a small Account dimension you should configure the audited scenarios with the O. Only audit scenarios where users make changes.

For the Accounts dimension change the Enable Data Audit field to Y for the accounts to be audited in Scenarios configured with O. Again, only audit accounts where users make changes. There is no value to auditing accounts that are calculated. FDQM it has its own audit trail so if you use that to load data you should try to omit accounts that FDQM loads and just audit accounts where users manually submit or clear data. In our case we knew there were specific areas that generated problems so we focused on accounts in those areas.

The audit data can be viewed in workspace by selecting Navigate > Administer > Consolidation Administration > Audit > Data Audit. The resulting data can be filtered by any of the POV fields. The data grid will show who did it, what they did, when they did it, where they did it, the POV of the intersection touched, and the value assigned.

The data audit details are in the table <AppName>_DataAudit. The HFM administrators guide advises that the number of records in the table be less than 500,000 to avoid degrading performance. For a large company half a million records can be added in an afternoon of data loads during month end close.

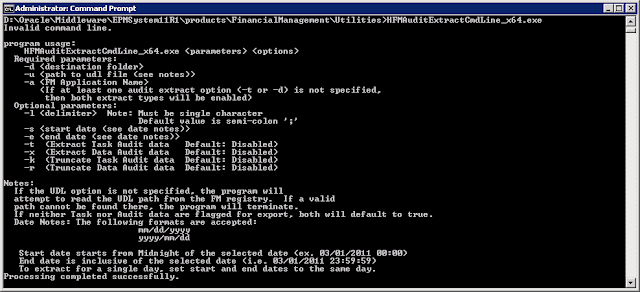

The audit data can be exported and truncated from the Consolidation Administration tab. But a better solution is to find an automated way of exporting and truncating the table. In the EPMSystem11R1\products\FinancialManagement\Utilities folder is the command line utility HFMAuditExtractCmdLine.exe. This utility has switches to truncate and/or extract the data and task audit logs for a consolidation application. Data can be extracted or truncated within a specified date range and extracted to any available location. Note that the utility requires a Universal Data Link (.udl) file which must be configured with the connection string to the HFM database along with credentials that have at least db_datawriter access to the database.

The command line to extract the data is pretty straightforward. Assign the destination folder, full path to the .udl file, specify the application, delimiter, start, and end dates, and the switches for which operations to perform. One quirk I found, at least with the 64-bit version of the utility for 11.1.2.2.500, is that -k and -r switches both export and truncate the data instead of just truncating.

HFMAuditExtractCmdLine_x64 -d c:\Extracts -u C:\MyHFM.udl -a Comma -s 2017/01/01 -e 2017/02/29 -r

This example command will extract the data to the C:\Extracts folder, use the C:\MyHFM.udl file to connect to the HFM database and the Comma application, then extract and truncate all of the data audit records recorded between January an February of 2017. Note that in the example I'm using the default delimiter which is the semicolon. If you specify a delimiter it must be a printable character since there is a known issue with the command line utility which can't process [Tab] as a delimiter.

The command line utility is available up to version 11.1.2.3, but is not bundled with version 11.1.2.4 and above. However the format of the table has not changed between versions so the utility will still work. Oracle patch 9976978 can be downloaded to get an older version of the utility. You can also get the latest PSU for a previous version which will have a copy of the utility in the files\products\FinancialManagement\Utilities folder.

The utility can be configured in a batch file or run directly from the Task Scheduler. But we still need to adjust the end date. In the next post I will detail sample code that exports the data to a .csv file, adjusting for the date, and imports that .csv file into a database. I use this as a nightly scheduled process to keep the HFM database lean and preserve the audit data for forensic examination.